Championing Responsible AI: A Global Commitment for the Communication Profession


Editor's note: IABC and the Global Communication Certification Council (GCCC) are among the founding signatories of the Venice Pledge, a global commitment to the responsible and ethical use of AI in communication practice.

As AI begins to reshape our lives and work, the responsibility of ensuring that it aligns with our values has never been more pressing. In May, the Global Alliance for Public Relations and Communication Management (Global Alliance), of which both IABC and the GCCC are members, met in Venice, Italy, for an AI symposium focused on refining the Responsible AI Guiding Principles. Nearly a third of Global Alliance members attended the workshop to contribute to and co-sign the Venice Pledge, a shared commitment to applying ethical, transparent, and human-centered approaches to AI in communication practice.

As active participants in the Venice session, we — Maliha Aqeel, SCMP, PMP, representing IABC, and Bonnie Caver, SCMP, IABC Fellow, representing the GCCC — were proud to sign the Venice Pledge as founding signatories. In this article, we share key insights from the session and explore what the updated responsible AI principles mean for our profession.

Strengthening the Principles

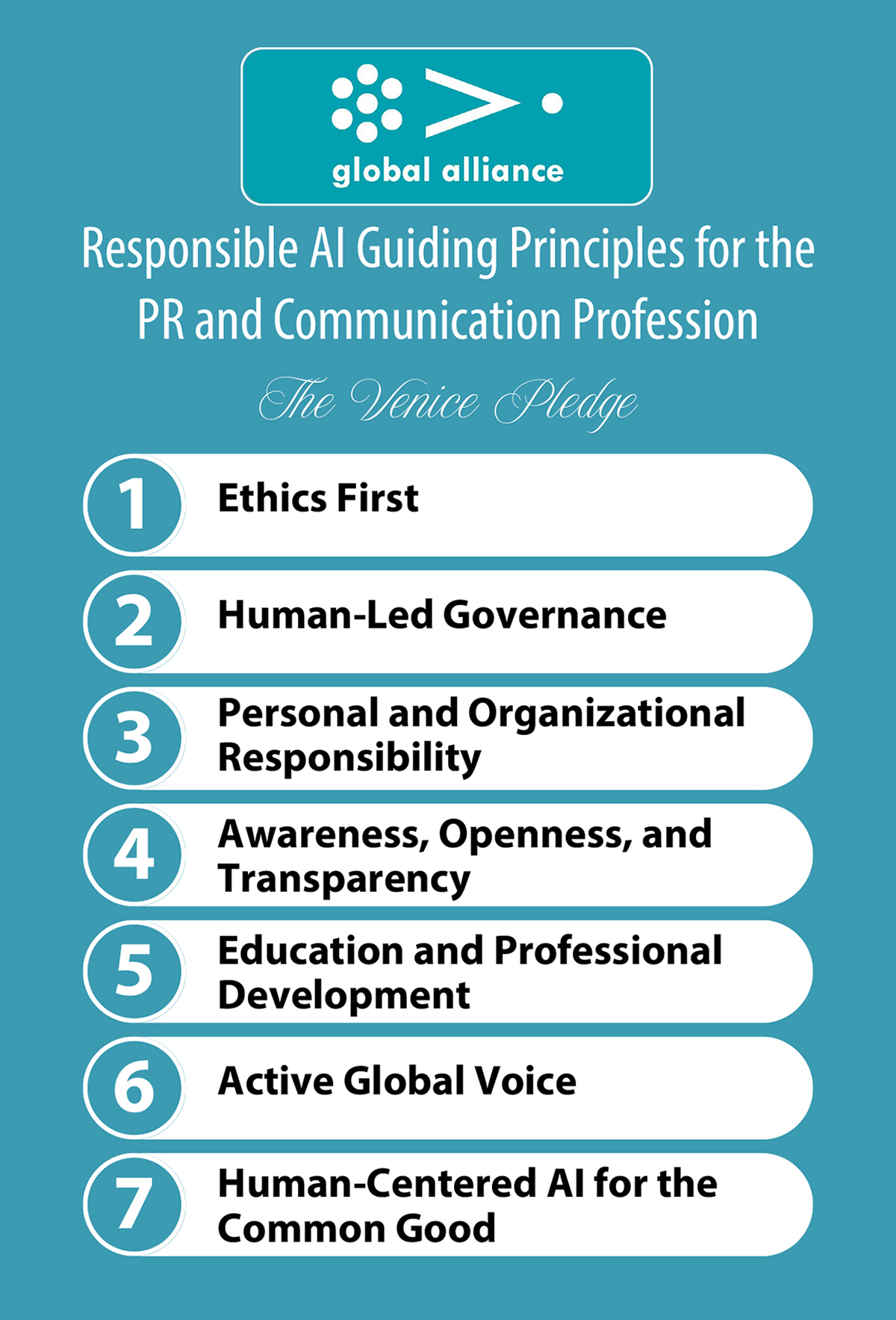

The Venice workshop resulted in a shared definition of responsible AI, updated guiding principles, and the introduction of a seventh principle: Human-Centered AI for the Common Good. Together, these principles offer member organizations — and the global public relations (PR) and communication profession — a unified framework and voice for the responsible use of AI. They also serve as a guide for how we apply AI in our work, provide strategic counsel, and shape its broader societal impacts.

These principles are a significant milestone for the PR and communication profession: the first time a global industry has established unified guidelines on responsible AI. This achievement offers communication professionals immediate credibility to lead informed conversations within their own organizations and with external stakeholders.

A Responsible AI Definition

The Global Alliance defines responsible AI as the ethical, transparent, and human-centered development and application of artificial intelligence, strategically deployed to support, not replace, human judgment, creativity, and communication. It emphasizes accountability, fairness, and accuracy while minimizing bias, misinformation, and harm. Responsible AI focuses on mitigating the risks of AI by upholding privacy and data protection, reflecting professional and organizational values, and ensuring proper attribution, governance, and human oversight to protect trust, integrity, and societal well-being.

The 7 Responsible AI Guiding Principles

- Ethics First

- Human-Led Governance

- Personal and Organizational Responsibility

- Awareness, Openness, and Transparency

- Education and Professional Development

- Active Global Voice

- Human-Centered AI for the Common Good

Why Human-Centered AI Is a Starting Point

In co-developing principle seven — Human-Centered AI for the Common Good — the working group led by Maliha focused on moving beyond responsible use and governance toward an ethical imperative: AI must serve people, not just systems. As communication professionals, we are uniquely positioned at the intersection of technology, society, and trust. This means we are responsible not only for how we use AI in our work, but also for how we influence its adoption across institutions and communities.

Our discussion around principle seven began with a simple yet powerful premise: AI should enhance human dignity, not diminish it. It must reflect human values, cultural context, and the lived realities of those it affects. This principle reminds us that we need AI-powered systems that are inclusive by design, equitable in outcomes, and accountable in application.

While AI holds extraordinary potential to address social challenges, we must also acknowledge its environmental impact and the societal disruptions it may cause, such as job displacement and growing inequality. This requires vigilance in how we communicate its risks and benefits, a commitment to advocating for AI that supports inclusion and prosperity, and the courage to speak out against use cases that may cause harm.

This is not an abstract ideal but a practical responsibility for our profession. While we may not build or deploy AI systems ourselves, we play a critical strategic role in shaping how AI is understood, communicated, and governed within our organizations and in society. By prioritizing human needs and shared prosperity in our messaging and counsel, we can help ensure AI evolves as a tool for meaningful progress, not just efficiency or profit.

What’s Next

As AI continues to evolve, we must ensure that our guiding principles evolve with it. The Global Alliance is committed to ongoing monitoring of AI in the communication profession, with an official annual review scheduled for May 2026.

Now it’s your turn to join the Responsible AI Movement. Read the principles, sign the Venice Pledge, and start conversations in your organization and professional network. Champion responsible, human-centered AI in every aspect of your work and help shape the future of our profession by influencing organizational policy and supporting initiatives that prioritize human-centered values.